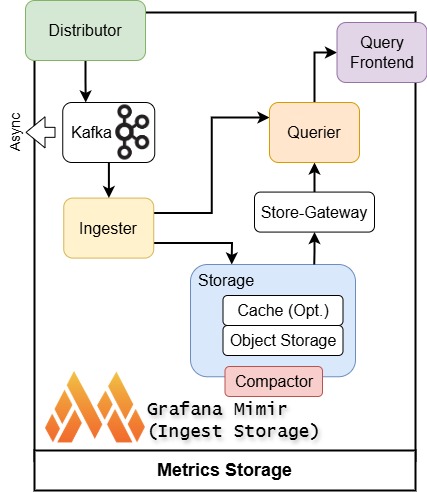

Grafana Mimir is an open source, horizontally scalable time series database designed for long-term storage of Prometheus metrics. Built on a microservices architecture, Mimir provides high availability and multi-tenancy while maintaining compatibility with Prometheus’ remote write API.

Core Architecture

Mimir follows a microservices-based design in which all components are compiled into a single binary. The -target parameter determines which component or components the binary runs, allowing flexible deployment patterns. For simpler deployments, Mimir can run in monolithic mode (all components in one process) or in read-write mode (components grouped by function).
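
To make the single-binary idea concrete, here is a minimal sketch that builds the command lines a deployment tool might generate for each mode. Only the -target and -config.file flags come from Mimir itself; the mode names used as dictionary keys, the partial microservices component list, and the mimir.yaml path are illustrative assumptions.

```python
# Hypothetical sketch: only -target and -config.file are real Mimir flags; the
# mode keys, the microservices subset, and "mimir.yaml" are illustrative.
DEPLOYMENT_MODES: dict[str, list[str]] = {
    "monolithic": ["all"],                       # every component in one process
    "read-write": ["read", "write", "backend"],  # components grouped by function
    # A partial list; a real microservices deployment runs more components.
    "microservices": ["distributor", "ingester", "querier",
                      "query-frontend", "store-gateway", "compactor"],
}


def build_commands(mode: str, config_file: str = "mimir.yaml") -> list[list[str]]:
    """Return one argv list per Mimir process that the given mode needs."""
    if mode not in DEPLOYMENT_MODES:
        raise ValueError(f"unknown deployment mode: {mode!r}")
    return [
        ["mimir", f"-target={target}", f"-config.file={config_file}"]
        for target in DEPLOYMENT_MODES[mode]
    ]


if __name__ == "__main__":
    for argv in build_commands("read-write"):
        print(" ".join(argv))
```

The same binary and configuration file appear in every command; only the -target value changes, which is what lets one artifact cover all three deployment styles.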

The architecture consists of stateless and stateful components. Most are stateless, requiring no persistent data between restarts, while stateful components like ingesters rely on non-volatile storage to prevent data loss.

Mimir's write path is built on one of two mechanisms:

- Stateful ingesters with local write-ahead logs, which manage the ingestion of new data and serve recent data for queries

- Kafka as a central ingest pipeline that decouples read and write operations
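
The decoupling that a log such as Kafka provides can be illustrated with a minimal in-memory sketch: writers append records to partitions and return immediately, while each reader tracks its own offset and catches up at its own pace. The PartitionedLog and Consumer classes are hypothetical stand-ins; they do not model Mimir's actual partition assignment, offset commits, or record format.

```python
# In-memory stand-in for a Kafka topic. Mimir's real partitioning, offset
# commit protocol, and record format are NOT modeled here.


class PartitionedLog:
    """Append-only partitions; each record is addressed by (partition, offset)."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: list[list[dict]] = [[] for _ in range(num_partitions)]

    def produce(self, partition: int, record: dict) -> int:
        """Write path: append a record and return its offset in the partition."""
        self.partitions[partition].append(record)
        return len(self.partitions[partition]) - 1

    def fetch(self, partition: int, offset: int) -> list[dict]:
        """Return every record at or after offset; records are never removed."""
        return self.partitions[partition][offset:]


class Consumer:
    """Read path: consumes one partition at its own pace via its own offset."""

    def __init__(self, log: PartitionedLog, partition: int) -> None:
        self.log = log
        self.partition = partition
        self.offset = 0
        self.records: list[dict] = []

    def poll(self) -> int:
        """Fetch new records, advance the offset, and return how many arrived."""
        batch = self.log.fetch(self.partition, self.offset)
        self.records.extend(batch)
        self.offset += len(batch)
        return len(batch)


if __name__ == "__main__":
    log = PartitionedLog(num_partitions=2)
    log.produce(0, {"series": 'up{job="api"}', "ts": 1000, "value": 1.0})
    log.produce(0, {"series": 'up{job="api"}', "ts": 16000, "value": 1.0})
    fast, slow = Consumer(log, 0), Consumer(log, 0)
    print(fast.poll(), fast.poll())  # prints 2 0
    log.produce(0, {"series": 'up{job="api"}', "ts": 31000, "value": 0.0})
    print(slow.poll(), fast.poll())  # prints 3 1
```

The writer never waits for either consumer, and a slow consumer loses nothing because the log retains every record until it reads it.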

The Write Path

Data flows through Mimir’s write path as follows:

- Distributors receive incoming samples from Prometheus instances via the remote write API. These HTTP POST requests contain Snappy-compressed Protocol Buffer messages, and each request carries a tenant ID (in the X-Scope-OrgID header) for multi-tenancy support.

- Ingesters receive samples from distributors and append them to per-tenant time series databases (TSDBs) stored on local disk. Samples are kept in memory and simultaneously written to a write-ahead log (WAL) for recovery. The WAL is particularly important: it should be stored on a persistent disk (such as AWS EBS or GCP Persistent Disk) so that it survives ingester failures.
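
The ingester's write-then-remember pattern can be sketched in a few lines. This toy Ingester class is hypothetical: it uses one JSON-lines file per tenant as its "WAL", whereas the real Prometheus TSDB WAL is a segmented binary format. The point it shows is ordering: each sample is durably logged before it is added to the in-memory head, so a restarted ingester can rebuild its state by replaying the log.

```python
import json
import os
import tempfile


class Ingester:
    """Toy ingester: per-tenant in-memory series plus one WAL file per tenant."""

    def __init__(self, wal_dir: str) -> None:
        self.wal_dir = wal_dir
        # tenant -> series -> list of (timestamp_ms, value)
        self.head: dict[str, dict[str, list[tuple[int, float]]]] = {}

    def _wal_path(self, tenant: str) -> str:
        return os.path.join(self.wal_dir, f"{tenant}.wal")

    def append(self, tenant: str, series: str, ts: int, value: float) -> None:
        # Log first and fsync, so a sample is on disk before it counts as ingested.
        with open(self._wal_path(tenant), "a", encoding="utf-8") as wal:
            wal.write(json.dumps([series, ts, value]) + "\n")
            wal.flush()
            os.fsync(wal.fileno())
        self.head.setdefault(tenant, {}).setdefault(series, []).append((ts, value))

    def replay(self) -> None:
        """Rebuild the in-memory head from the WAL files after a restart."""
        self.head = {}
        for name in os.listdir(self.wal_dir):
            if not name.endswith(".wal"):
                continue
            tenant = name[: -len(".wal")]
            with open(os.path.join(self.wal_dir, name), encoding="utf-8") as wal:
                for line in wal:
                    series, ts, value = json.loads(line)
                    self.head.setdefault(tenant, {}).setdefault(series, []).append((ts, value))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as wal_dir:
        Ingester(wal_dir).append("team-a", 'up{job="api"}', 1000, 1.0)
        restarted = Ingester(wal_dir)  # simulates a process restart: empty memory
        restarted.replay()
        print(restarted.head)
```

If the WAL directory lived on ephemeral storage, the replay step would find nothing after a node failure, which is why the text recommends a persistent disk.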

Every two hours by default, in-memory samples are flushed to disk as TSDB blocks, and the WAL is truncated. These blocks are then uploaded to long-term object storage and retained locally for a configurable period to allow queriers and store-gateways time to discover them.
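
Which block a sample ends up in depends only on its timestamp. The sketch below assumes blocks are aligned to multiples of the block range in milliseconds since the Unix epoch, which is our understanding of how Prometheus TSDB cuts blocks; it ignores details such as how long the head keeps data before cutting a block.

```python
BLOCK_RANGE_MS = 2 * 60 * 60 * 1000  # two hours, the default block range


def block_bounds(ts_ms: int, range_ms: int = BLOCK_RANGE_MS) -> tuple[int, int]:
    """Return the [min_time, max_time) window of the aligned block holding ts_ms."""
    start = ts_ms - ts_ms % range_ms
    return start, start + range_ms


def group_into_blocks(samples: list[tuple[int, float]],
                      range_ms: int = BLOCK_RANGE_MS) -> dict[tuple[int, int], list]:
    """Bucket (timestamp_ms, value) samples by the block window they fall into."""
    blocks: dict[tuple[int, int], list] = {}
    for ts, value in samples:
        blocks.setdefault(block_bounds(ts, range_ms), []).append((ts, value))
    return blocks


if __name__ == "__main__":
    samples = [(7_199_999, 1.0), (7_200_000, 2.0), (9_000_000, 3.0)]
    for window, contents in sorted(group_into_blocks(samples).items()):
        print(window, contents)
```

A sample one millisecond before the two-hour mark lands in the first block, while a sample exactly on the mark starts the next one.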

Replication and Compaction

By default, each time series is replicated to three ingesters, with each writing its own block to long-term storage. The Compactor merges these blocks from multiple ingesters, removing duplicate samples and significantly reducing storage utilization.
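
The deduplication step can be sketched as a merge of time-sorted sample lists, one per replica, that keeps a single sample per timestamp. This is a simplified illustration, not the compactor's actual algorithm: it assumes replicas agree on the value at any given timestamp and simply keeps the first copy it sees.

```python
import heapq


def merge_replicas(*replica_blocks: list[tuple[int, float]]) -> list[tuple[int, float]]:
    """Merge time-sorted (timestamp_ms, value) lists, keeping one sample per timestamp."""
    merged: list[tuple[int, float]] = []
    for ts, value in heapq.merge(*replica_blocks, key=lambda sample: sample[0]):
        if merged and merged[-1][0] == ts:
            continue  # duplicate written by another replica; keep the first copy
        merged.append((ts, value))
    return merged


if __name__ == "__main__":
    a = [(0, 1.0), (15_000, 1.0), (30_000, 1.0)]
    b = [(0, 1.0), (30_000, 1.0)]  # this replica missed a sample, e.g. during a restart
    c = [(0, 1.0), (15_000, 1.0), (30_000, 1.0)]
    result = merge_replicas(a, b, c)
    print(f"{len(a) + len(b) + len(c)} samples in, {len(result)} out: {result}")
```

Besides cutting storage roughly by the replication factor, merging also fills gaps: a sample missing from one replica is still recovered from the others.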

The Read Path

Queries follow a different path optimized for performance:

- The query-frontend receives incoming queries and splits queries that cover long time ranges into several queries over shorter ranges

- It checks the results cache to return cached data when possible

- Non-cached queries are placed in an in-memory queue (or in the optional query-scheduler)

- Queriers act as workers, pulling queries from the queue

- Queriers fetch data from both store-gateways (for long-term storage) and ingesters (for recent data)

- Results are returned to the query-frontend for aggregation before being sent to the client
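
The splitting and caching steps above can be sketched as follows. The 24-hour split interval is an assumption (we believe it matches Mimir's default), the execute callable is a hypothetical stand-in for the queue and querier workers, and ranges are treated as half-open for simplicity. A real query-frontend also avoids caching results for recent, still-changing data, which this sketch ignores.

```python
from typing import Callable

DAY_MS = 24 * 60 * 60 * 1000  # assumed split interval


def split_by_interval(start_ms: int, end_ms: int,
                      interval_ms: int = DAY_MS) -> list[tuple[int, int]]:
    """Split [start_ms, end_ms) into sub-ranges aligned to interval_ms boundaries."""
    ranges = []
    cur = start_ms
    while cur < end_ms:
        boundary = cur - cur % interval_ms + interval_ms
        nxt = min(boundary, end_ms)
        ranges.append((cur, nxt))
        cur = nxt
    return ranges


class QueryFrontend:
    """Hypothetical frontend: split, serve from cache, send misses to `execute`."""

    def __init__(self, execute: Callable[[str, int, int], list]) -> None:
        self.execute = execute  # stands in for the queue plus querier workers
        self.cache: dict[tuple[str, int, int], list] = {}

    def query_range(self, promql: str, start_ms: int, end_ms: int) -> list:
        results = []
        for sub_start, sub_end in split_by_interval(start_ms, end_ms):
            key = (promql, sub_start, sub_end)
            if key not in self.cache:
                self.cache[key] = self.execute(promql, sub_start, sub_end)
            results.extend(self.cache[key])  # aggregate sub-results for the client
        return results


if __name__ == "__main__":
    calls = []

    def fake_querier(q: str, s: int, e: int) -> list:
        calls.append((s, e))
        return [(q, s, e)]

    frontend = QueryFrontend(fake_querier)
    frontend.query_range("up", 0, 3 * DAY_MS)
    frontend.query_range("up", 0, 3 * DAY_MS)  # fully served from the cache
    print(len(calls))  # prints 3
```

Aligning sub-ranges to fixed boundaries is what makes caching effective: two overlapping dashboards asking for different but overlapping windows still share most of their cache keys.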

Storage Format

Mimir’s storage format is based on Prometheus TSDB. Each tenant gets its own TSDB, with data persisted in on-disk blocks that cover two-hour ranges by default. Blocks contain:

- An index file mapping metric names and labels to time series

- Metadata files

- Time series chunks (typically storing ~120 samples per chunk)
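
The logical roles of the index and the chunks can be sketched with plain Python structures. This shows only the idea, not the on-disk format: the real index is a binary file with a symbol table, and real chunks are compressed. The sketch relies on the Prometheus convention that the metric name is stored as the __name__ label; the series IDs and helper names are hypothetical. It also works through the chunk arithmetic: a two-hour block scraped every 15 seconds holds 7200 / 15 = 480 samples per series, or four chunks of 120.

```python
from collections import defaultdict

SAMPLES_PER_CHUNK = 120  # the typical figure quoted above


def build_postings(series: dict[int, dict[str, str]]) -> dict[tuple[str, str], list[int]]:
    """Inverted index: map each (label name, label value) to sorted series IDs."""
    postings: dict[tuple[str, str], list[int]] = defaultdict(list)
    for series_id in sorted(series):
        for name, value in series[series_id].items():
            postings[(name, value)].append(series_id)
    return dict(postings)


def lookup(postings: dict[tuple[str, str], list[int]], **matchers: str) -> list[int]:
    """Intersect postings lists for equality matchers, e.g. lookup(p, job="api")."""
    result = None
    for name, value in matchers.items():
        ids = set(postings.get((name, value), []))
        result = ids if result is None else result & ids
    return sorted(result or [])


def cut_chunks(samples: list, per_chunk: int = SAMPLES_PER_CHUNK) -> list[list]:
    """Split one series' samples into consecutive fixed-size chunks."""
    return [samples[i:i + per_chunk] for i in range(0, len(samples), per_chunk)]


if __name__ == "__main__":
    series = {
        1: {"__name__": "up", "job": "api"},
        2: {"__name__": "up", "job": "db"},
        3: {"__name__": "http_requests_total", "job": "api"},
    }
    postings = build_postings(series)
    print(lookup(postings, __name__="up", job="api"))  # prints [1]
    two_hours_at_15s = [(i * 15_000, 1.0) for i in range(7200 // 15)]
    print(len(cut_chunks(two_hours_at_15s)))  # prints 4
```

A query such as up{job="api"} therefore never scans every series: it intersects two short postings lists and then reads only the chunks of the matching series.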

Long-term storage requires an object store backend: Amazon S3, Google Cloud Storage, Azure Storage, OpenStack Swift, or local filesystem (single-node only).
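
The local-filesystem backend makes it easy to picture what an upload looks like. The sketch below writes a placeholder block shaped like a Prometheus TSDB block (meta.json, index, chunks/000001) and copies it into a directory acting as the bucket, under a per-tenant prefix. The tenant-prefixed layout reflects our understanding of how Mimir organizes its bucket; the block name block-0001 is a hypothetical stand-in for the ULID that real blocks use, and the file contents are empty placeholders.

```python
import json
import os
import shutil
import tempfile


def write_block(block_dir: str, min_time: int, max_time: int) -> None:
    """Create a placeholder directory shaped like a Prometheus TSDB block."""
    os.makedirs(os.path.join(block_dir, "chunks"), exist_ok=True)
    with open(os.path.join(block_dir, "meta.json"), "w", encoding="utf-8") as f:
        json.dump({"minTime": min_time, "maxTime": max_time, "version": 1}, f)
    # Empty placeholders: real files hold the binary index and encoded chunks.
    open(os.path.join(block_dir, "index"), "wb").close()
    open(os.path.join(block_dir, "chunks", "000001"), "wb").close()


def upload_block(bucket_root: str, tenant: str, block_dir: str) -> list[str]:
    """Copy a local block into a filesystem 'bucket' under <tenant>/<block name>/."""
    block_name = os.path.basename(os.path.normpath(block_dir))
    dest = os.path.join(bucket_root, tenant, block_name)
    shutil.copytree(block_dir, dest)  # creates missing parent directories
    return sorted(
        os.path.relpath(os.path.join(root, name), bucket_root).replace(os.sep, "/")
        for root, _dirs, files in os.walk(dest)
        for name in files
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        local_block = os.path.join(tmp, "ingester-data", "block-0001")
        write_block(local_block, 0, 7_200_000)
        for key in upload_block(os.path.join(tmp, "bucket"), "team-a", local_block):
            print(key)
```

Swapping this directory copy for S3, GCS, Azure, or Swift changes only where the objects land; the block files and the per-tenant key layout stay the same, which is why the local filesystem works for single-node testing.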

Why This Architecture Matters

Mimir’s design addresses key challenges in metrics storage: horizontal scalability, multi-tenancy, high availability through replication, and cost-effective long-term storage through block compaction. The separation of read and write paths allows independent scaling based on workload patterns, while the use of object storage backends makes it practical to retain metrics for extended periods without prohibitive infrastructure costs.

For teams running Prometheus at scale, Mimir provides a production-ready path to centralized, long-term metrics storage without sacrificing the Prometheus query language or operational model.
