This is the second post in my series on scoping data and AI projects for success, based on insights from more than 80 real-world engagements.

Picture this: Your team has crafted the perfect problem statement, stakeholders are aligned on objectives, and everyone’s excited about the transformative AI solution you’re about to build. Three months later, the project stalls because the “high-quality customer database” turned out to have 40% missing email addresses, and those “comprehensive product documents” are actually a mix of outdated PDFs, corrupted scans, and files that can’t be processed by your chosen AI tools.

Sound familiar? This scenario plays out countless times across organizations because teams rush past one of the most critical scoping activities: thoroughly identifying data sources and assessing their quality. In successful data and AI projects, this isn’t just a checkbox. It’s the foundation that determines whether your scope is ambitious or delusional.

Why Data Quality Assessment Makes or Breaks Your Scope

When scoping AI projects, teams often fall into the “field of dreams” trap: if we build it, good data will come. But here’s the reality from more than 80 project engagements: your project scope must be shaped by your data reality, not your data aspirations.

Quality assessment isn’t about finding perfect data. It’s about understanding your constraints well enough to set realistic boundaries around what you can deliver, when you can deliver it, and what resources you’ll need to get there.

The most successful projects don’t necessarily use the cleanest data. They use data whose quality characteristics are thoroughly understood and appropriately matched to project objectives. This alignment between data reality and project scope creates the foundation for delivering genuine business value rather than technical disappointments.

A Data Detective Framework: Steps to Quality-Driven Scoping

1. Map the Complete Data Landscape for Your Project

Start by casting a wide net beyond the obvious data sources. Your scoping success depends on understanding the full ecosystem of available information:

Internal Assets: Core databases, data warehouses, operational systems, and often-overlooked departmental spreadsheets that might contain the most current insights.

External Sources: Third-party datasets, APIs, public repositories, and vendor-provided information that could enhance your project but come with their own access and quality constraints.

Shadow Data: Those unofficial databases and local files that every organization has. They’re often the most relevant but least standardized sources.

Document Collections: PDFs, Word documents, emails, reports, presentations, and scanned materials that contain valuable unstructured information but require specialized processing approaches.

The key insight: Your project scope must account for data integration complexity. Once integration effort is factored in, five high-quality sources can be more valuable than twenty mediocre ones.
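To make that trade-off concrete, here is a minimal Python sketch of a data source inventory. The `DataSource` fields, the quality-per-effort ratio, and the greedy shortlist are hypothetical illustrations rather than a prescribed method; the quality scores and integration estimates would come from your own assessment.

```python
from dataclasses import dataclass


@dataclass
class DataSource:
    name: str
    category: str            # e.g. "internal", "external", "shadow", "document"
    quality_score: float     # 0.0-1.0, from your quality assessment (hypothetical scale)
    integration_days: float  # estimated effort to connect, map, and clean the source


def value_per_effort(source: DataSource) -> float:
    """Hypothetical heuristic: quality delivered per day of integration work."""
    return source.quality_score / max(source.integration_days, 1.0)


def shortlist(sources: list[DataSource], budget_days: float) -> list[DataSource]:
    """Greedily take the best quality-per-effort sources until the integration budget is spent."""
    chosen, spent = [], 0.0
    for src in sorted(sources, key=value_per_effort, reverse=True):
        if spent + src.integration_days <= budget_days:
            chosen.append(src)
            spent += src.integration_days
    return chosen


# Illustrative inventory; names and numbers are made up.
inventory = [
    DataSource("crm_db", "internal", 0.85, 5),
    DataSource("sales_spreadsheets", "shadow", 0.55, 8),
    DataSource("vendor_api", "external", 0.75, 10),
    DataSource("contract_pdfs", "document", 0.40, 20),
]
selected = [s.name for s in shortlist(inventory, budget_days=20)]
print(selected)
```

A greedy pick is not guaranteed to find the best combination of sources, but it is usually enough to start a scoping conversation about which sources are worth their integration cost.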

2. Leverage Data Quality Frameworks

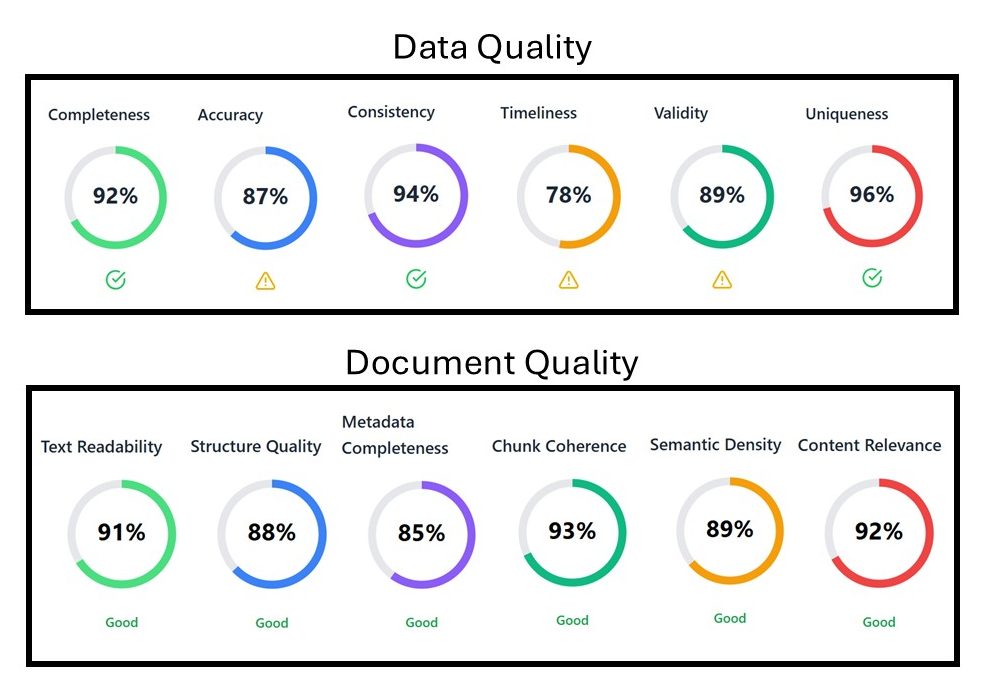

Systematic quality assessment evaluates every source across six critical dimensions:

Data Quality Metrics: The Foundations of AI Success Part I: Why Data Quality Metrics Are Critical for AI Solutions – Ross McNeely

Completeness: What percentage of critical fields are populated? Missing data isn’t always a project killer, but it must inform your scope decisions.

Accuracy: How well does the data reflect real-world conditions? Outdated customer addresses might be fine for demographic analysis but useless for direct marketing.

Consistency: Are formats and values standardized across sources? Inconsistent date formats or naming conventions can consume significant project resources.

Timeliness: Is the data fresh enough for your use case? Yesterday’s stock prices are historical data; yesterday’s fraud alerts are actionable intelligence.

Validity: Do values conform to expected ranges and business rules? Invalid entries often reveal systematic data collection issues.

Uniqueness: Are duplicate records creating bias or artificial inflation of your dataset?
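Most of these dimensions can be measured with a few lines of code once the rules are agreed. The Python sketch below scores completeness, validity, consistency, uniqueness, and timeliness over a handful of hypothetical customer records. The field names, the deliberately loose email pattern, the 0–120 age range, and the 365-day freshness window are illustrative assumptions. Accuracy is left out because it needs a trusted reference to compare against.

```python
import re
from datetime import date

# Hypothetical customer records; field names and values are illustrative only.
records = [
    {"id": 1, "email": "ana@example.com", "age": 34, "signup": "2024-03-01", "updated": date(2025, 1, 10)},
    {"id": 2, "email": None, "age": 29, "signup": "03/15/2024", "updated": date(2023, 6, 2)},
    {"id": 3, "email": "bo@example", "age": 212, "signup": "2024-04-20", "updated": date(2025, 2, 1)},
    {"id": 3, "email": "bo@example", "age": 212, "signup": "2024-04-20", "updated": date(2025, 2, 1)},
]

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # loose check, not full RFC validation
ISO_DATE_RE = re.compile(r"^\d{4}-\d{2}-\d{2}$")       # the agreed date format (assumption)


def completeness(rows, field):
    """Share of rows where the field is populated."""
    return sum(r.get(field) not in (None, "") for r in rows) / len(rows)


def validity(rows, field, rule):
    """Share of populated values that pass a business rule."""
    values = [r[field] for r in rows if r.get(field) not in (None, "")]
    return sum(rule(v) for v in values) / len(values) if values else 0.0


def consistency(rows, field, pattern):
    """Share of populated values that follow one agreed format."""
    return validity(rows, field, lambda v: bool(pattern.match(str(v))))


def uniqueness(rows, key):
    """Distinct keys divided by total rows; below 1.0 means duplicates exist."""
    return len({r[key] for r in rows}) / len(rows)


def timeliness(rows, field, as_of, max_age_days):
    """Share of rows updated within the freshness window."""
    return sum((as_of - r[field]).days <= max_age_days for r in rows) / len(rows)


profile = {
    "completeness(email)": completeness(records, "email"),
    "validity(email)": validity(records, "email", lambda v: bool(EMAIL_RE.match(v))),
    "validity(age)": validity(records, "age", lambda v: 0 <= v <= 120),
    "consistency(signup)": consistency(records, "signup", ISO_DATE_RE),
    "uniqueness(id)": uniqueness(records, "id"),
    "timeliness(updated)": timeliness(records, "updated", date(2025, 3, 1), 365),
}
for metric, value in profile.items():
    print(f"{metric:22} {value:.2f}")
```

Even on four rows, the output separates problems that need different remedies: a missing email is a completeness gap, while `bo@example` is a validity failure that no amount of backfilling will fix.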

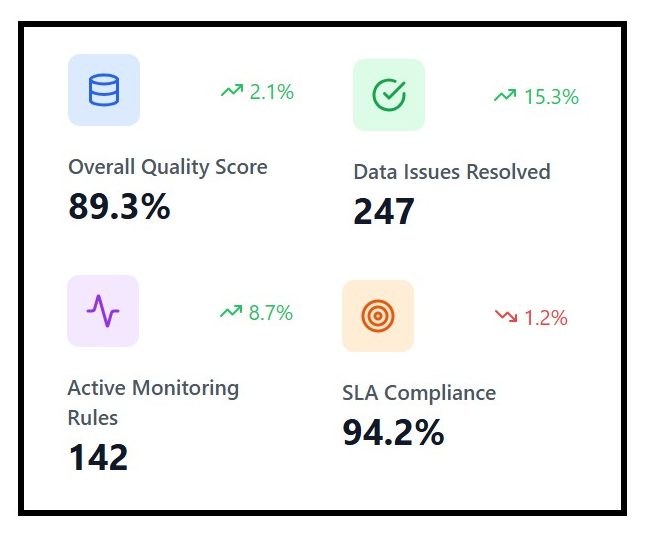

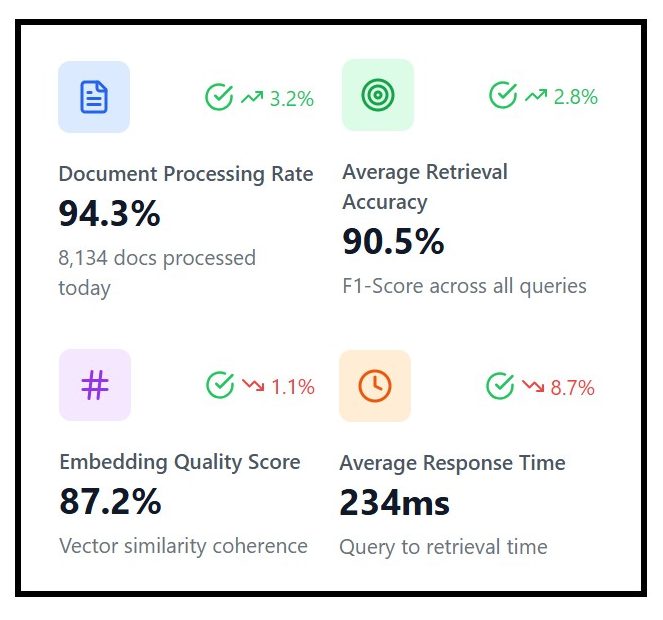

Data Quality Tracking: Data Quality Metrics Schema – Ross McNeely
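The figure shows one tracking schema; the Python sketch below is not a reproduction of it, just a hypothetical minimal record illustrating the same idea: store each measurement with its source, dimension, agreed threshold, and timestamp so quality can be tracked over time instead of checked once. Field names, threshold values, and scores are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class QualityMeasurement:
    """One row in a hypothetical quality-tracking log."""
    source: str          # data source or document collection
    column: str          # attribute measured ("*" for whole-source checks)
    dimension: str       # completeness, accuracy, consistency, timeliness, validity, uniqueness
    value: float         # measured score between 0.0 and 1.0
    threshold: float     # minimum acceptable score agreed during scoping
    measured_at: datetime

    @property
    def passes(self) -> bool:
        return self.value >= self.threshold


# Illustrative entries; real values would come from your profiling runs.
run_time = datetime(2025, 3, 1, tzinfo=timezone.utc)
log = [
    QualityMeasurement("customer_db", "email", "completeness", 0.60, 0.90, run_time),
    QualityMeasurement("customer_db", "customer_id", "uniqueness", 0.99, 0.98, run_time),
    QualityMeasurement("customer_db", "signup_date", "consistency", 0.97, 0.95, run_time),
]

# Measurements below their agreed threshold are candidates for scope discussion.
failing = [(m.source, m.column, m.dimension) for m in log if not m.passes]
print(failing)
```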

3. Tackle the Document Quality Challenge

As AI projects increasingly work with unstructured content, document quality assessment becomes equally critical to project scoping:

Document Quality Metrics: The Foundations of AI Success Part II: Why Document and Content Management are Critical for AI Solutions – Ross McNeely

Format Assessment: Can your chosen AI tools process the document formats in your collection? A scope that assumes clean text extraction from poor-quality scanned PDFs is headed for trouble.

Content Integrity: Are document collections complete? Missing pages, corrupted files, or version control issues can introduce biases that compound throughout your project.

Metadata Richness: Creation dates, authors, classifications, and other contextual information often provide as much value as document content. Projects frequently fail when this context isn’t preserved or accessible.

Language and Structure Consistency: Mixing formal reports with casual emails, or combining documents in multiple languages, requires different processing approaches that impact project complexity and timelines.
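Some of these checks can be automated before any AI tooling is involved. This Python sketch walks a document folder, counts formats, flags files whose extensions fall outside an assumed list of supported formats, and applies two cheap integrity checks: empty files, and PDFs missing the `%PDF-` header. The `SUPPORTED` set is an assumption to adjust to your stack. Detecting image-only scanned PDFs would need a PDF text-extraction library and is not shown here.

```python
import tempfile
from collections import Counter
from pathlib import Path

# Assumption: the formats your chosen AI tooling can ingest; adjust to your stack.
SUPPORTED = {".pdf", ".docx", ".txt", ".md", ".html"}


def looks_corrupted(path: Path) -> bool:
    """Cheap integrity checks: empty files, and PDFs missing the %PDF- signature."""
    if path.stat().st_size == 0:
        return True
    if path.suffix.lower() == ".pdf":
        with path.open("rb") as fh:
            return not fh.read(5).startswith(b"%PDF-")
    return False


def assess_collection(root: Path) -> dict:
    """Summarize formats, unsupported files, and suspect files under a folder."""
    files = [p for p in root.rglob("*") if p.is_file()]
    formats = Counter(p.suffix.lower() or "<none>" for p in files)
    unsupported = sorted(str(p.relative_to(root)) for p in files
                         if p.suffix.lower() not in SUPPORTED)
    corrupted = sorted(str(p.relative_to(root)) for p in files
                       if p.suffix.lower() in SUPPORTED and looks_corrupted(p))
    return {"total": len(files), "formats": dict(formats),
            "unsupported": unsupported, "corrupted": corrupted}


# Usage on a throwaway folder with made-up files.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "policy.pdf").write_bytes(b"%PDF-1.7\n...")
    (root / "scan.pdf").write_bytes(b"")          # zero-byte file
    (root / "notes.txt").write_text("Quarterly review")
    (root / "legacy.wpd").write_bytes(b"\x00\x01")  # format outside SUPPORTED
    report = assess_collection(root)

print(report)
```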

5. Create Quality-Informed Scope Boundaries

The most valuable outcome of quality assessment is often recognizing what you can’t do with current data assets. Quality constraints should inform scope decisions early, before teams invest effort in unrealistic objectives:

Impact vs. Effort Analysis: Which quality improvements are essential for project success versus nice-to-have enhancements? This prioritization directly shapes your project scope and resource requirements.

Fallback Source Planning: When primary data sources don’t meet quality thresholds, having alternatives already identified prevents scope creep and timeline delays.

Integration Readiness Assessment: Schema misalignment or incompatible document formats often require significant preprocessing that must be reflected in project timelines and resource allocation.
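Fallback source planning can be expressed as a simple rule: walk the candidate sources in priority order and take the first one that clears every agreed threshold. The Python sketch below uses hypothetical thresholds, source names, and scores. It also reports each source’s shortfall, which feeds the impact-versus-effort discussion about which quality improvements are essential.

```python
from typing import Optional

# Hypothetical minimum scores agreed with stakeholders during scoping.
THRESHOLDS = {"completeness": 0.90, "validity": 0.95, "timeliness": 0.80}


def gaps(scores: dict[str, float]) -> dict[str, float]:
    """How far each dimension falls short of its threshold (empty if the source qualifies)."""
    return {dim: round(t - scores.get(dim, 0.0), 3)
            for dim, t in THRESHOLDS.items() if scores.get(dim, 0.0) < t}


def choose_source(candidates: list[tuple[str, dict[str, float]]]) -> Optional[str]:
    """Return the first source, in priority order, that meets every threshold."""
    for name, scores in candidates:
        if not gaps(scores):
            return name
    return None  # no source qualifies: revisit scope, not just the timeline


candidates = [
    ("crm_db", {"completeness": 0.60, "validity": 0.97, "timeliness": 0.95}),       # primary
    ("billing_db", {"completeness": 0.93, "validity": 0.96, "timeliness": 0.85}),   # fallback 1
    ("vendor_feed", {"completeness": 0.99, "validity": 0.90, "timeliness": 0.99}),  # fallback 2
]
for name, scores in candidates:
    print(name, gaps(scores) or "meets thresholds")
print("selected:", choose_source(candidates))
```

When `choose_source` returns `None`, no candidate meets the bar. That is a signal to renegotiate scope early rather than absorb the gap as a timeline slip.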

6. Establish Quality Monitoring and Communication Plans

Quality assessment isn’t a one-time scoping activity. It’s an ongoing project requirement:

Quality Heat Maps: Visual tools that communicate data and document quality status to stakeholders in business-friendly formats.

Monitoring Dashboards: Ongoing quality surveillance for dynamic data sources that might degrade over time.

Stakeholder Communication Protocols: Clear processes for discussing quality-related scope changes as new information emerges during project execution.
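A heat map and a degradation check can both be derived from the same repeated measurements. In this Python sketch, the green/amber/red bands, the 0.05 amber margin, and the 0.02 degradation tolerance are hypothetical choices, and the baseline and latest scores are made-up examples.

```python
# Hypothetical rule: green at/above threshold, amber within AMBER_MARGIN below it, red otherwise.
AMBER_MARGIN = 0.05


def status(score: float, threshold: float) -> str:
    if score >= threshold:
        return "GREEN"
    if score >= threshold - AMBER_MARGIN:
        return "AMBER"
    return "RED"


def heat_map(latest, thresholds):
    """Rows are sources, columns are dimensions, cells are RAG statuses."""
    return {source: {dim: status(score, thresholds[dim]) for dim, score in dims.items()}
            for source, dims in latest.items()}


def degraded(baseline, latest, tolerance=0.02):
    """List (source, dimension, drop) where a score fell by more than the tolerance."""
    drops = []
    for source, dims in latest.items():
        for dim, score in dims.items():
            before = baseline.get(source, {}).get(dim)
            if before is not None and before - score > tolerance:
                drops.append((source, dim, round(before - score, 3)))
    return drops


thresholds = {"completeness": 0.90, "timeliness": 0.80}
baseline = {"crm_db": {"completeness": 0.95, "timeliness": 0.90},
            "contracts": {"completeness": 0.88, "timeliness": 0.70}}
latest = {"crm_db": {"completeness": 0.94, "timeliness": 0.78},
          "contracts": {"completeness": 0.87, "timeliness": 0.70}}

grid = heat_map(latest, thresholds)
for source, row in grid.items():
    print(f"{source:10}", "  ".join(f"{dim}={s}" for dim, s in row.items()))
print("degraded:", degraded(baseline, latest))
```

The grid is the stakeholder-facing view; the degradation list is what a monitoring job would alert on, since a source can still be amber while trending quickly toward red.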

Practical Scoping Outcomes: From Assessment to Action

Effective data and document quality assessment drives specific scoping decisions:

Realistic Timeline Estimates: Based on actual data conditions rather than optimistic assumptions about “cleaning up the data quickly.”

Clear Resource Requirements: Understanding quality improvement needs helps accurately scope data engineering, preprocessing, and validation efforts.

Transparent Stakeholder Expectations: Quality constraints become part of scope discussions, preventing unrealistic expectations about what insights or capabilities are achievable.

Risk Mitigation Strategies: Known quality issues can be addressed proactively rather than becoming project surprises that derail timelines and budgets.

The Competitive Advantage of Quality-First Scoping

Organizations that invest in thorough data and document quality assessment during project scoping gain significant advantages:

- Projects deliver realistic value rather than over-promising and under-delivering

- Teams spend time solving business problems instead of fighting data issues

- Stakeholders maintain confidence because project outcomes align with scoped expectations

- Technical resources focus on value-creating activities rather than emergency data remediation

Making Quality Assessment Actionable in Your Next Project

As you scope your next data or AI initiative, remember that quality assessment isn’t about achieving perfection. It’s about understanding your constraints well enough to make informed decisions about project scope, timeline, and expected outcomes.

The most successful projects align data reality with business objectives through thorough quality assessment during scoping. This upfront investment in understanding your data landscape pays dividends throughout the entire project lifecycle.
