AI Systems and Their Performance Measurements

Link to AI Quality Blog Post: The Foundation of AI Success Part III: Why AI Quality Metrics Are Critical for AI Solutions – Ross McNeely

- AI systems generate performance measurements (like accuracy scores, response times, or error rates)

- Each individual measurement comes from exactly one AI system – measurements aren’t shared between systems

- A single AI system can produce multiple different measurements over time

AI Systems and Quality Evaluations

- AI systems are evaluated using quality scorecards (assessment tools that measure performance, reliability, safety, etc.)

- Each quality scorecard is dedicated to evaluating exactly one AI system – scorecards aren’t shared between systems

- A single AI system can be assessed using multiple different quality scorecards

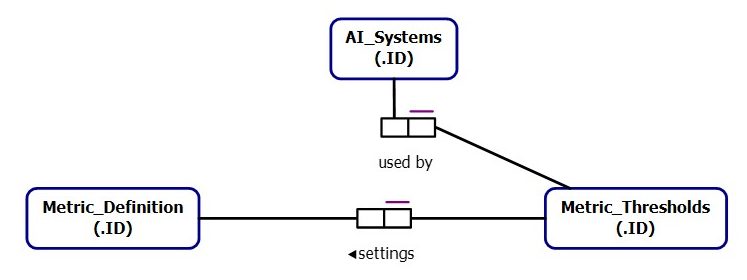

AI Systems, Performance Thresholds, and Metric Definitions

Relationship 1: AI Systems and Their Alert Thresholds

- AI systems have performance thresholds set for them (like “response time must be under 2 seconds” or “accuracy must be above 95%”)

- Each threshold setting applies to exactly one AI system – thresholds aren’t shared between systems

- A single AI system can have multiple different threshold settings for various performance aspects

Relationship 2: Thresholds and What They Measure

- Each threshold setting is based on a specific metric definition (the rules for what gets measured and how)

- Each threshold uses exactly one metric definition – you can’t mix different measurement types in one threshold

- The same metric definition can be used by multiple different thresholds across various systems

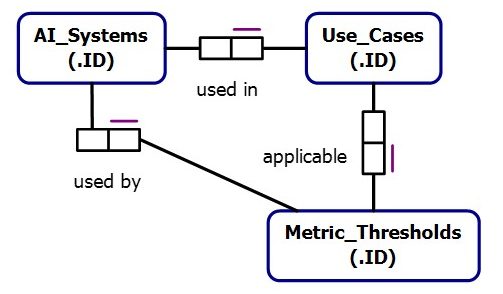

AI Systems, Business Use Cases, and Performance Standards

Relationship 1: AI Systems and Their Applications

- AI systems are deployed for specific business use cases (like “customer service chatbot,” “fraud detection,” or “document processing”)

- Each use case is powered by exactly one AI system – use cases don’t share AI systems

- A single AI system can be used across multiple different business use cases

Relationship 2: Use Cases and Their Performance Requirements

- Each business use case has specific performance thresholds that must be met (like response time limits or accuracy requirements)

- Each performance threshold applies to exactly one use case – thresholds are customized per use case

- A single use case can have multiple different performance thresholds covering various aspects of quality

AI Quality Metrics (FULL) Schema generated by Claude.ai

-- AI Metrics Tracking Database Schema

-- Complete DDL for tracking 80+ AI quality metrics

-- =====================================================

-- CORE CONFIGURATION TABLES

-- =====================================================

-- AI Systems/Models Registry

CREATE TABLE ai.ai_systems (

system_id INT IDENTITY(1,1) PRIMARY KEY,

system_name NVARCHAR(100) NOT NULL,

system_type NVARCHAR(50) NOT NULL, -- 'LLM', 'Vision', 'Multimodal', etc.

version NVARCHAR(20) NOT NULL,

deployment_environment NVARCHAR(20) NOT NULL, -- 'Production', 'Staging', 'Test'

created_date DATETIME2 DEFAULT GETDATE(),

is_active BIT DEFAULT 1,

description NVARCHAR(500),

CONSTRAINT UK_ai_systems_name_version UNIQUE (system_name, version, deployment_environment)

);

-- Metric Categories and Definitions

CREATE TABLE ai.metric_categories (

category_id INT IDENTITY(1,1) PRIMARY KEY,

category_name NVARCHAR(100) NOT NULL,

category_description NVARCHAR(500),

display_order INT DEFAULT 0,

is_active BIT DEFAULT 1

);

CREATE TABLE ai.metric_definitions (

metric_id INT IDENTITY(1,1) PRIMARY KEY,

metric_name NVARCHAR(100) NOT NULL,

category_id INT NOT NULL,

metric_type NVARCHAR(20) NOT NULL, -- 'NUMERIC', 'PERCENTAGE', 'BOOLEAN', 'TEXT'

data_type NVARCHAR(20) NOT NULL, -- 'FLOAT', 'INT', 'BIT', 'VARCHAR'

min_value FLOAT NULL,

max_value FLOAT NULL,

unit_of_measure NVARCHAR(20) NULL,

description NVARCHAR(500),

calculation_method NVARCHAR(1000),

is_active BIT DEFAULT 1,

created_date DATETIME2 DEFAULT GETDATE(),

CONSTRAINT FK_metric_definitions_category FOREIGN KEY (category_id) REFERENCES ai.metric_categories(category_id),

CONSTRAINT UK_metric_definitions_name UNIQUE (metric_name)

);

-- Business Domains and Use Cases

CREATE TABLE ai.business_domains (

domain_id INT IDENTITY(1,1) PRIMARY KEY,

domain_name NVARCHAR(100) NOT NULL,

industry NVARCHAR(50),

description NVARCHAR(500),

is_active BIT DEFAULT 1

);

CREATE TABLE ai.use_cases (

use_case_id INT IDENTITY(1,1) PRIMARY KEY,

use_case_name NVARCHAR(100) NOT NULL,

domain_id INT NOT NULL,

system_id INT NOT NULL,

description NVARCHAR(500),

risk_level NVARCHAR(20) DEFAULT 'MEDIUM', -- 'LOW', 'MEDIUM', 'HIGH', 'CRITICAL'

compliance_requirements NVARCHAR(500),

is_active BIT DEFAULT 1,

CONSTRAINT FK_use_cases_domain FOREIGN KEY (domain_id) REFERENCES ai.business_domains(domain_id),

CONSTRAINT FK_use_cases_system FOREIGN KEY (system_id) REFERENCES ai.ai_systems(system_id)

);

-- =====================================================

-- CORE MEASUREMENT TABLES

-- =====================================================

-- Main metrics tracking table

CREATE TABLE ai.metric_measurements (

measurement_id BIGINT IDENTITY(1,1) PRIMARY KEY,

system_id INT NOT NULL,

use_case_id INT NULL,

metric_id INT NOT NULL,

measurement_timestamp DATETIME2 DEFAULT GETDATE(),

measurement_date DATE,

measurement_hour TINYINT,

-- Measurement values (only one should be populated based on metric type)

numeric_value FLOAT NULL,

percentage_value FLOAT NULL,

boolean_value BIT NULL,

text_value NVARCHAR(MAX) NULL,

-- Context information

batch_id NVARCHAR(50) NULL,

session_id NVARCHAR(100) NULL,

user_id NVARCHAR(100) NULL,

request_id NVARCHAR(100) NULL,

-- Measurement metadata

measurement_method NVARCHAR(100), -- 'AUTOMATED', 'MANUAL', 'BATCH_PROCESS'

confidence_score FLOAT NULL,

sample_size INT NULL,

data_source NVARCHAR(100),

-- Performance context

system_load_pct FLOAT NULL,

concurrent_users INT NULL,

CONSTRAINT FK_measurements_system FOREIGN KEY (system_id) REFERENCES ai.ai_systems(system_id),

CONSTRAINT FK_measurements_use_case FOREIGN KEY (use_case_id) REFERENCES ai.use_cases(use_case_id),

CONSTRAINT FK_measurements_metric FOREIGN KEY (metric_id) REFERENCES ai.metric_definitions(metric_id)

);

-- Clustered index for time-series queries

CREATE CLUSTERED INDEX IX_measurements_timestamp ON ai.metric_measurements (measurement_timestamp, system_id, metric_id);

-- Additional indexes for common query patterns

CREATE INDEX IX_measurements_system_date ON ai.metric_measurements (system_id, measurement_date) INCLUDE (metric_id, numeric_value);

CREATE INDEX IX_measurements_metric_date ON ai.metric_measurements (metric_id, measurement_date) INCLUDE (numeric_value, percentage_value);

CREATE INDEX IX_measurements_use_case ON ai.metric_measurements (use_case_id, measurement_timestamp) INCLUDE (metric_id, numeric_value);

-- =====================================================

-- SPECIALIZED MEASUREMENT TABLES

-- =====================================================

-- Detailed conversation/interaction tracking

CREATE TABLE ai.interaction_sessions (

session_id NVARCHAR(100) PRIMARY KEY,

system_id INT NOT NULL,

use_case_id INT NULL,

user_id NVARCHAR(100),

start_timestamp DATETIME2 NOT NULL,

end_timestamp DATETIME2 NULL,

session_duration_ms AS (DATEDIFF(MILLISECOND, start_timestamp, end_timestamp)),

total_turns INT DEFAULT 0,

user_satisfaction_score FLOAT NULL,

completion_status NVARCHAR(20), -- 'COMPLETED', 'ABANDONED', 'ESCALATED', 'ERROR'

CONSTRAINT FK_sessions_system FOREIGN KEY (system_id) REFERENCES ai.ai_systems(system_id),

CONSTRAINT FK_sessions_use_case FOREIGN KEY (use_case_id) REFERENCES ai.use_cases(use_case_id)

);

CREATE TABLE ai.interaction_turns (

turn_id BIGINT IDENTITY(1,1) PRIMARY KEY,

session_id NVARCHAR(100) NOT NULL,

turn_number INT NOT NULL,

turn_timestamp DATETIME2 DEFAULT GETDATE(),

-- Input/Output content

input_text NVARCHAR(MAX),

output_text NVARCHAR(MAX),

input_tokens INT,

output_tokens INT,

-- Performance metrics

response_time_ms INT,

processing_time_ms INT,

queue_time_ms INT,

-- Quality scores (computed per turn)

coherence_score FLOAT,

fluency_score FLOAT,

relevance_score FLOAT,

groundedness_score FLOAT,

toxicity_score FLOAT,

bias_score FLOAT,

-- Context tracking

context_length INT,

context_retained BIT,

CONSTRAINT FK_turns_session FOREIGN KEY (session_id) REFERENCES ai.interaction_sessions(session_id),

CONSTRAINT UK_turns_session_number UNIQUE (session_id, turn_number)

);

-- Safety and content analysis results

CREATE TABLE ai.content_analysis (

analysis_id BIGINT IDENTITY(1,1) PRIMARY KEY,

turn_id BIGINT NULL,

measurement_id BIGINT NULL,

analysis_timestamp DATETIME2 DEFAULT GETDATE(),

-- Content safety scores

toxicity_score FLOAT,

hate_speech_score FLOAT,

violence_score FLOAT,

self_harm_score FLOAT,

sexual_content_score FLOAT,

profanity_score FLOAT,

-- Bias detection

gender_bias_score FLOAT,

racial_bias_score FLOAT,

cultural_bias_score FLOAT,

socioeconomic_bias_score FLOAT,

-- Content classification

content_categories NVARCHAR(500), -- JSON array of detected categories

flagged_content BIT DEFAULT 0,

human_review_required BIT DEFAULT 0,

-- Analysis metadata

analyzer_version NVARCHAR(50),

confidence_threshold FLOAT,

CONSTRAINT FK_content_analysis_turn FOREIGN KEY (turn_id) REFERENCES ai.interaction_turns(turn_id),

CONSTRAINT FK_content_analysis_measurement FOREIGN KEY (measurement_id) REFERENCES ai.metric_measurements(measurement_id)

);

-- Performance and resource utilization

CREATE TABLE ai.system_performance (

performance_id BIGINT IDENTITY(1,1) PRIMARY KEY,

system_id INT NOT NULL,

measurement_timestamp DATETIME2 DEFAULT GETDATE(),

-- Response metrics

avg_response_time_ms FLOAT,

p50_response_time_ms FLOAT,

p95_response_time_ms FLOAT,

p99_response_time_ms FLOAT,

-- Throughput metrics

requests_per_second FLOAT,

tokens_per_second FLOAT,

concurrent_sessions INT,

-- Resource utilization

cpu_utilization_pct FLOAT,

memory_utilization_pct FLOAT,

gpu_utilization_pct FLOAT,

disk_io_rate FLOAT,

network_bandwidth_mbps FLOAT,

-- Reliability metrics

error_rate_pct FLOAT,

timeout_rate_pct FLOAT,

retry_rate_pct FLOAT,

availability_pct FLOAT,

-- Scaling metrics

auto_scale_events INT DEFAULT 0,

load_balancer_efficiency FLOAT,

CONSTRAINT FK_performance_system FOREIGN KEY (system_id) REFERENCES ai.ai_systems(system_id)

);

-- =====================================================

-- THRESHOLD AND ALERTING TABLES

-- =====================================================

-- Metric thresholds and SLAs

CREATE TABLE ai.metric_thresholds (

threshold_id INT IDENTITY(1,1) PRIMARY KEY,

system_id INT NOT NULL,

metric_id INT NOT NULL,

use_case_id INT NULL,

-- Threshold values

min_acceptable FLOAT NULL,

max_acceptable FLOAT NULL,

target_value FLOAT NULL,

critical_min FLOAT NULL,

critical_max FLOAT NULL,

-- Alert configuration

alert_enabled BIT DEFAULT 1,

alert_frequency_minutes INT DEFAULT 5,

escalation_threshold INT DEFAULT 3,

-- Validity

effective_date DATE DEFAULT CAST(GETDATE() AS DATE),

expiry_date DATE NULL,

is_active BIT DEFAULT 1,

CONSTRAINT FK_thresholds_system FOREIGN KEY (system_id) REFERENCES ai.ai_systems(system_id),

CONSTRAINT FK_thresholds_metric FOREIGN KEY (metric_id) REFERENCES ai.metric_definitions(metric_id),

CONSTRAINT FK_thresholds_use_case FOREIGN KEY (use_case_id) REFERENCES ai.use_cases(use_case_id)

);

-- Alert log

CREATE TABLE ai.metric_alerts (

alert_id BIGINT IDENTITY(1,1) PRIMARY KEY,

threshold_id INT NOT NULL,

measurement_id BIGINT NULL,

alert_timestamp DATETIME2 DEFAULT GETDATE(),

alert_type NVARCHAR(20) NOT NULL, -- 'WARNING', 'CRITICAL', 'RESOLVED'

alert_severity NVARCHAR(20) NOT NULL, -- 'LOW', 'MEDIUM', 'HIGH', 'CRITICAL'

trigger_value FLOAT,

threshold_breached NVARCHAR(50), -- 'MIN_ACCEPTABLE', 'MAX_ACCEPTABLE', etc.

alert_message NVARCHAR(1000),

acknowledgment_status NVARCHAR(20) DEFAULT 'OPEN', -- 'OPEN', 'ACKNOWLEDGED', 'RESOLVED'

acknowledged_by NVARCHAR(100) NULL,

acknowledged_at DATETIME2 NULL,

resolution_notes NVARCHAR(1000) NULL,

CONSTRAINT FK_alerts_threshold FOREIGN KEY (threshold_id) REFERENCES ai.metric_thresholds(threshold_id),

CONSTRAINT FK_alerts_measurement FOREIGN KEY (measurement_id) REFERENCES ai.metric_measurements(measurement_id)

);

-- =====================================================

-- AGGREGATION AND REPORTING TABLES

-- =====================================================

-- Daily metric aggregations (for performance)

CREATE TABLE ai.daily_metric_summary (

summary_id BIGINT IDENTITY(1,1) PRIMARY KEY,

system_id INT NOT NULL,

metric_id INT NOT NULL,

use_case_id INT NULL,

summary_date DATE NOT NULL,

-- Aggregated values

min_value FLOAT,

max_value FLOAT,

avg_value FLOAT,

median_value FLOAT,

std_deviation FLOAT,

-- Count and quality metrics

measurement_count INT,

valid_measurements INT,

data_quality_score FLOAT,

-- Trending

trend_direction NVARCHAR(10), -- 'UP', 'DOWN', 'STABLE'

change_from_previous FLOAT,

change_percentage FLOAT,

created_timestamp DATETIME2 DEFAULT GETDATE(),

CONSTRAINT FK_daily_summary_system FOREIGN KEY (system_id) REFERENCES ai.ai_systems(system_id),

CONSTRAINT FK_daily_summary_metric FOREIGN KEY (metric_id) REFERENCES ai.metric_definitions(metric_id),

CONSTRAINT FK_daily_summary_use_case FOREIGN KEY (use_case_id) REFERENCES ai.use_cases(use_case_id),

CONSTRAINT UK_daily_summary_unique UNIQUE (system_id, metric_id, use_case_id, summary_date)

);

-- Quality scorecards

CREATE TABLE ai.quality_scorecards (

scorecard_id BIGINT IDENTITY(1,1) PRIMARY KEY,

system_id INT NOT NULL,

use_case_id INT NULL,

scorecard_date DATE NOT NULL,

scorecard_period NVARCHAR(20) NOT NULL, -- 'DAILY', 'WEEKLY', 'MONTHLY'

-- Overall scores

overall_quality_score FLOAT,

performance_score FLOAT,

safety_score FLOAT,

reliability_score FLOAT,

user_experience_score FLOAT,

-- Category scores

accuracy_score FLOAT,

coherence_score FLOAT,

fluency_score FLOAT,

groundedness_score FLOAT,

bias_score FLOAT,

toxicity_score FLOAT,

-- Business metrics

user_satisfaction_score FLOAT,

task_completion_rate FLOAT,

cost_efficiency_score FLOAT,

-- Metadata

calculations_timestamp DATETIME2 DEFAULT GETDATE(),

calculation_method NVARCHAR(100),

data_completeness_pct FLOAT,

CONSTRAINT FK_scorecards_system FOREIGN KEY (system_id) REFERENCES ai.ai_systems(system_id),

CONSTRAINT FK_scorecards_use_case FOREIGN KEY (use_case_id) REFERENCES ai.use_cases(use_case_id),

CONSTRAINT UK_scorecards_unique UNIQUE (system_id, use_case_id, scorecard_date, scorecard_period)

);

-- =====================================================

-- AUDIT AND METADATA TABLES

-- =====================================================

-- Data lineage tracking

CREATE TABLE ai.measurement_lineage (

lineage_id BIGINT IDENTITY(1,1) PRIMARY KEY,

measurement_id BIGINT NOT NULL,

source_system NVARCHAR(100),

source_table NVARCHAR(100),

source_query NVARCHAR(MAX),

extraction_timestamp DATETIME2,

transformation_applied NVARCHAR(500),

data_validator NVARCHAR(100),

validation_status NVARCHAR(20) DEFAULT 'VALID',

validation_notes NVARCHAR(1000),

CONSTRAINT FK_lineage_measurement FOREIGN KEY (measurement_id) REFERENCES ai.metric_measurements(measurement_id)

);

-- Change tracking for configuration

CREATE TABLE ai.configuration_changes (

change_id BIGINT IDENTITY(1,1) PRIMARY KEY,

table_name NVARCHAR(100) NOT NULL,

record_id INT NOT NULL,

change_type NVARCHAR(20) NOT NULL, -- 'INSERT', 'UPDATE', 'DELETE'

changed_by NVARCHAR(100) NOT NULL,

change_timestamp DATETIME2 DEFAULT GETDATE(),

old_values NVARCHAR(MAX), -- JSON

new_values NVARCHAR(MAX), -- JSON

change_reason NVARCHAR(500)

);

-- =====================================================

-- INITIAL DATA POPULATION

-- =====================================================

-- Insert metric categories

INSERT INTO ai.metric_categories (category_name, category_description, display_order) VALUES

('Core Performance', 'Basic accuracy and correctness metrics', 1),

('Response Quality', 'Language quality and semantic metrics', 2),

('Safety & Alignment', 'Content safety and bias detection', 3),

('Reliability & Robustness', 'System reliability and error handling', 4),

('User Experience', 'Engagement and satisfaction metrics', 5),

('Technical Performance', 'Speed, efficiency, and resource usage', 6),

('Domain Specific', 'Industry and use-case specific metrics', 7),

('Advanced Analytics', 'Drift detection and meta-metrics', 8),

('Compliance & Governance', 'Regulatory and policy adherence', 9);

-- Insert sample metric definitions (subset of the 80+ metrics)

INSERT INTO ai.metric_definitions (metric_name, category_id, metric_type, data_type, min_value, max_value, unit_of_measure, description) VALUES

-- Core Performance Metrics

('Accuracy', 1, 'PERCENTAGE', 'FLOAT', 0.0, 100.0, '%', 'Overall correctness of AI responses'),

('Precision', 1, 'PERCENTAGE', 'FLOAT', 0.0, 100.0, '%', 'True positives / (True positives + False positives)'),

('Recall', 1, 'PERCENTAGE', 'FLOAT', 0.0, 100.0, '%', 'True positives / (True positives + False negatives)'),

('F1_Score', 1, 'NUMERIC', 'FLOAT', 0.0, 1.0, 'score', 'Harmonic mean of precision and recall'),

-- Response Quality Metrics

('Coherence_Score', 2, 'NUMERIC', 'FLOAT', 0.0, 10.0, 'score', 'Logical consistency and flow of responses'),

('Fluency_Score', 2, 'NUMERIC', 'FLOAT', 0.0, 10.0, 'score', 'Natural language quality and readability'),

('Relevance_Score', 2, 'NUMERIC', 'FLOAT', 0.0, 10.0, 'score', 'How well response addresses the query'),

('Groundedness_Score', 2, 'NUMERIC', 'FLOAT', 0.0, 10.0, 'score', 'Support by provided sources or knowledge'),

-- Safety & Alignment Metrics

('Toxicity_Score', 3, 'NUMERIC', 'FLOAT', 0.0, 1.0, 'score', 'Toxicity level of generated content'),

('Bias_Score', 3, 'NUMERIC', 'FLOAT', 0.0, 1.0, 'score', 'Overall bias in responses'),

('Hallucination_Rate', 3, 'PERCENTAGE', 'FLOAT', 0.0, 100.0, '%', 'Frequency of unsupported information'),

-- Technical Performance Metrics

('Response_Time_MS', 6, 'NUMERIC', 'INT', 0, NULL, 'ms', 'Time to generate response'),

('Throughput_RPS', 6, 'NUMERIC', 'FLOAT', 0, NULL, 'req/sec', 'Requests processed per second'),

('CPU_Utilization', 6, 'PERCENTAGE', 'FLOAT', 0.0, 100.0, '%', 'CPU usage percentage'),

('Memory_Utilization', 6, 'PERCENTAGE', 'FLOAT', 0.0, 100.0, '%', 'Memory usage percentage'),

-- User Experience Metrics

('User_Satisfaction', 5, 'NUMERIC', 'FLOAT', 1.0, 10.0, 'rating', 'User satisfaction rating'),

('Task_Completion_Rate', 5, 'PERCENTAGE', 'FLOAT', 0.0, 100.0, '%', 'Percentage of successfully completed tasks'),

('Session_Duration', 5, 'NUMERIC', 'INT', 0, NULL, 'seconds', 'Average session duration');

-- =====================================================

-- VIEWS FOR COMMON QUERIES

-- =====================================================

-- Real-time quality dashboard view

CREATE VIEW ai.vw_current_quality_metrics AS

SELECT

s.system_name,

s.version,

uc.use_case_name,

mc.category_name,

md.metric_name,

md.unit_of_measure,

mm.numeric_value,

mm.percentage_value,

mm.measurement_timestamp,

ROW_NUMBER() OVER (PARTITION BY mm.system_id, mm.metric_id ORDER BY mm.measurement_timestamp DESC) as rn

FROM ai.metric_measurements mm

JOIN ai.ai_systems s ON mm.system_id = s.system_id

JOIN ai.metric_definitions md ON mm.metric_id = md.metric_id

JOIN ai.metric_categories mc ON md.category_id = mc.category_id

LEFT JOIN ai.use_cases uc ON mm.use_case_id = uc.use_case_id

WHERE s.is_active = 1 AND md.is_active = 1

AND mm.measurement_timestamp >= DATEADD(HOUR, -24, GETDATE());

-- Performance trend analysis view

CREATE VIEW ai.vw_metric_trends AS

SELECT

system_id,

metric_id,

summary_date,

avg_value,

trend_direction,

change_percentage,

LAG(avg_value, 1) OVER (PARTITION BY system_id, metric_id ORDER BY summary_date) as previous_value,

LAG(avg_value, 7) OVER (PARTITION BY system_id, metric_id ORDER BY summary_date) as value_7_days_ago,

LAG(avg_value, 30) OVER (PARTITION BY system_id, metric_id ORDER BY summary_date) as value_30_days_ago

FROM ai.daily_metric_summary

WHERE summary_date >= DATEADD(DAY, -90, GETDATE());

Leave a reply to The Foundation of AI Success Part III: Why AI Quality Metrics Are Critical for AI Solutions – Ross McNeely Cancel reply