The data engineering landscape is undergoing a transformative shift. As organizations race to harness AI agents to automate data flows and generate insights, a practical question follows: how do you speed up data engineering and still deliver the data quality that AI projects demand? The Model Context Protocol (MCP), the same open standard that lets AI agents connect to data sources, can also be used to assist data engineers.

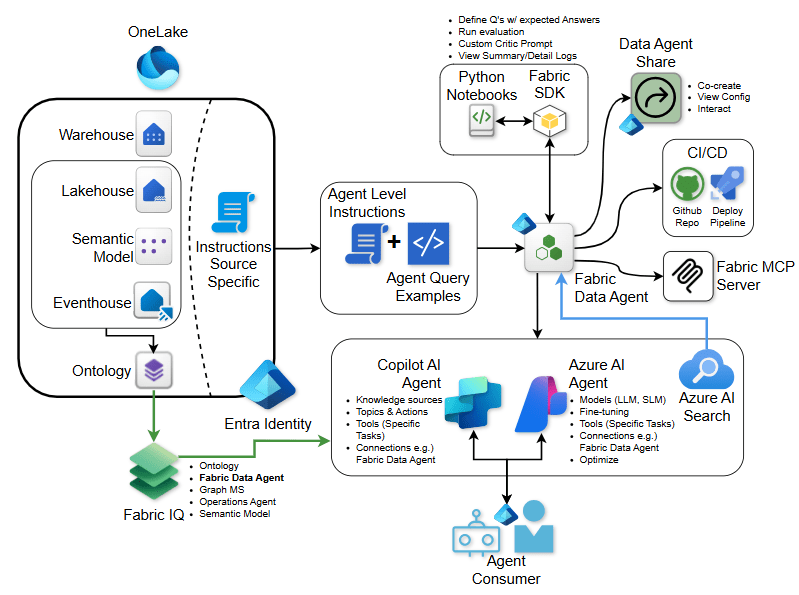

Microsoft Fabric MCP: Context aware development

Microsoft Fabric has introduced an innovative approach to MCP that prioritizes developer control and AI-assisted authoring. The Fabric MCP is a local, developer-focused framework that packages the platform’s complete API specifications, item definitions, and best practices into a unified context layer.

What makes Fabric MCP unique is its security model: it runs locally on your machine and provides AI agents with the context they need to generate code without actually accessing your production environment. You remain in full control, reviewing and deciding when to execute AI-generated code.
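The review-before-execute pattern described above can be sketched in a few lines. This is a minimal illustration, not Fabric MCP's actual implementation: `Proposal`, `ReviewQueue`, and `dry_run` are hypothetical names invented for this example, and the "code" is just a string that a stand-in runner inspects rather than executes.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Proposal:
    """AI-generated code waiting for human review (hypothetical type)."""
    description: str
    code: str
    approved: bool = False


@dataclass
class ReviewQueue:
    """Holds proposals; nothing runs until a person approves it."""
    pending: List[Proposal] = field(default_factory=list)

    def submit(self, description: str, code: str) -> Proposal:
        # The agent can only add work to the queue, never run it.
        proposal = Proposal(description, code)
        self.pending.append(proposal)
        return proposal

    def approve(self, proposal: Proposal) -> None:
        # Called by the human reviewer after reading the generated code.
        proposal.approved = True

    def execute(self, proposal: Proposal, runner: Callable[[str], str]) -> str:
        # Refuse to run anything that has not been explicitly approved.
        if not proposal.approved:
            raise PermissionError("proposal has not been reviewed and approved")
        self.pending.remove(proposal)
        return runner(proposal.code)


def dry_run(code: str) -> str:
    """Stand-in runner (assumption): reports what would run instead of running it."""
    return f"would run {len(code.splitlines())} line(s)"
```

The design choice worth noting is that approval and execution are separate steps, so the agent's output stays inert until the engineer decides otherwise.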

Key capabilities include:

- Complete API context: AI agents can browse catalogs of all supported workloads and fetch detailed request/response schemas for Lakehouses, pipelines, semantic models, notebooks, and Real-Time Intelligence workloads

- Item definition knowledge: Exposes JSON schemas for every Fabric item type, helping agents understand data structures, constraints, and defaults

- AI-assisted authoring: Generate or update Fabric items with AI while maintaining human oversight and control

- Cross-platform workflows: Orchestrate scenarios that span multiple services using the same open standard

For data engineers working with Fabric’s Real-Time Intelligence, there’s also a specialized MCP server that enables AI agents to execute KQL queries against Eventhouse, providing natural language interfaces for real-time data analysis with schema discovery capabilities.

Databricks MCP: Flexible Options for Every Use Case

Databricks offers three distinct MCP deployment options to match different organizational needs:

1. Databricks-Managed MCP Servers: Ready-to-use servers that let agents query data and access Unity Catalog functions immediately. These servers enforce Unity Catalog permissions automatically, ensuring agents and users can only access authorized tools and data. They support both OAuth and PAT authentication and provide instant access to Databricks services like Genie and Unity Catalog functions.

2. External MCP Servers: Connect to third-party MCP servers hosted outside Databricks using managed proxies and Unity Catalog connections. This option leverages Databricks to manage authentication through OAuth flows and token refresh, providing secure proxy authentication to external services and APIs.

3. Custom MCP Servers: Host your own MCP server as a Databricks app, giving you complete control to bring custom tools and specialized business logic. This option offers maximum flexibility for unique enterprise requirements, though it requires you to configure authentication and authorization yourself.

Databricks' approach standardizes how agents interact with data while keeping the deployment model flexible. Data engineers can choose the option that best fits their security requirements, governance model, and use case complexity.
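To make the "standardized interaction" concrete: whichever deployment option hosts the server, an MCP client talks to it with JSON-RPC 2.0 messages, using methods such as `tools/list` and `tools/call`. The sketch below only builds those messages with the standard library; it does not contact any server. The tool name `run_sql` and its arguments are hypothetical placeholders, not tools that Databricks is known to expose under those names.

```python
import json
from itertools import count
from typing import Any, Dict

# Monotonically increasing request ids, as JSON-RPC expects a unique id per request.
_request_ids = count(1)


def build_tool_list() -> Dict[str, Any]:
    """Build a request asking the server which tools it offers."""
    return {"jsonrpc": "2.0", "id": next(_request_ids), "method": "tools/list"}


def build_tool_call(tool_name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build a request that invokes one named tool with keyword arguments."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


if __name__ == "__main__":
    # "run_sql" is an illustrative tool name (assumption), not a documented one.
    request = build_tool_call("run_sql", {"query": "SELECT 1"})
    print(json.dumps(request, indent=2))
```

Because the message shape is the same everywhere, switching between a managed, external, or custom server changes the endpoint and authentication, not the way the agent phrases its requests.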

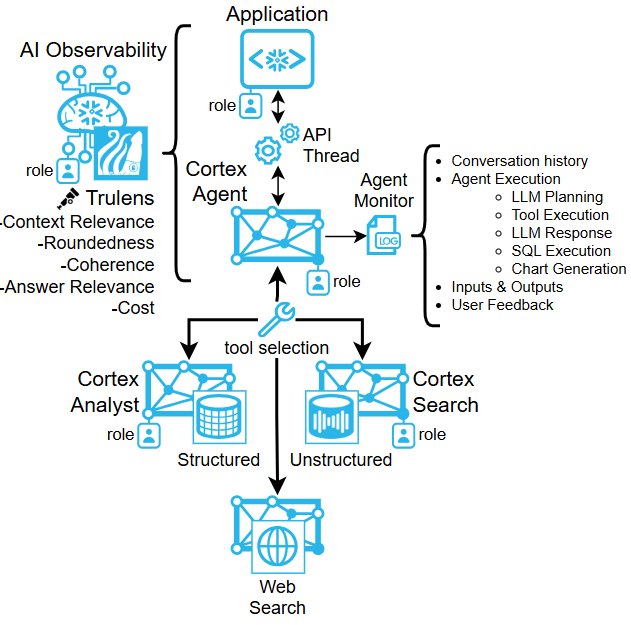

Snowflake MCP: Enterprise Grade Governance Meets AI Agents

Snowflake’s managed MCP server implementation focuses squarely on security and governance, providing AI agents with an open-standards-based interface to connect with governed enterprise data.

The Snowflake MCP server is fully managed: there is no additional infrastructure to deploy and no separate compute charge for the server itself. It integrates directly with Snowflake’s governance model, meaning the same role-based access controls, data masking policies, and security boundaries that protect your data also extend to AI agent interactions.
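The idea that an agent inherits the same controls as a human user can be shown with a toy model. This is a conceptual sketch only: it does not use any Snowflake API, and the roles, tables, grants, and masking rules below are all invented for illustration. The point is that the agent's request goes through the same access check and masking step as anyone else acting under that role.

```python
from typing import Dict, List, Set

# Hypothetical governance metadata: which role may read which table,
# and which columns are masked for that role.
ROLE_GRANTS: Dict[str, Set[str]] = {
    "analyst": {"orders"},
    "support": {"orders", "customers"},
}
MASKED_COLUMNS: Dict[str, Set[str]] = {"support": {"email"}}

# Hypothetical sample data standing in for governed tables.
TABLES: Dict[str, List[Dict[str, str]]] = {
    "orders": [{"order_id": "1001", "status": "shipped"}],
    "customers": [{"name": "Ada", "email": "ada@example.com"}],
}


def governed_read(role: str, table: str) -> List[Dict[str, str]]:
    """Return rows from a table, enforcing grants and masking for the given role.

    The caller may be a person or an AI agent; the checks are identical.
    """
    if table not in ROLE_GRANTS.get(role, set()):
        raise PermissionError(f"role {role!r} may not read {table!r}")
    masked = MASKED_COLUMNS.get(role, set())
    return [
        {col: ("***MASKED***" if col in masked else value) for col, value in row.items()}
        for row in TABLES[table]
    ]
```

An agent running under the hypothetical `analyst` role would be refused access to `customers`, while one running under `support` would see the rows with `email` masked.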

Standout features include:

- Cortex Analyst integration: Translates natural language requests into SQL queries that run against governed data, providing insights into structured information

- Cortex Search capabilities: Enables semantic search and retrieval from unstructured documents stored in or indexed by Snowflake

- Cortex Knowledge Extensions: Access licensed content from premium publishers like The Associated Press, The Washington Post, and Stack Overflow, complete with proper attribution

- Consistent authentication: Built-in OAuth 2.0 support ensures secure, standards-compliant authentication for MCP integrations

- Flexible configuration: Define tools in different database schemas aligned with existing policies, and create multiple MCP servers scoped to specific use cases

For data engineering teams, this means AI agents can retrieve both structured analytics and unstructured document insights while remaining within Snowflake’s secure governance boundary—no data movement, no separate security layers to manage.

The question is no longer if AI will transform data engineering, but how quickly organizations can adopt open standards like MCP to accelerate that transformation while maintaining security and governance. For forward-thinking data teams, now is the time to explore these capabilities and start building transformations quickly.
