When scoping Data and AI projects, the technical element can make the difference between transformative success and costly failure. This isn’t just about choosing the right tools; it’s about building a comprehensive understanding of what your organization can realistically achieve and what infrastructure you need to get there. I’ve observed that projects with clearly defined technical requirements and honest capability assessments consistently outperform those that skip this crucial step. Let’s explore a practical framework for getting this right.

The Capability Assessment Framework: Know Where You Stand

Before defining what you need, you must understand what you have. A comprehensive capability assessment forms the foundation of realistic project scoping.

Current State Analysis

Begin with a thorough evaluation of your existing data infrastructure and AI maturity. This means cataloging your current data storage systems, processing capabilities, and any existing machine learning or analytics tools. Don’t just document what you own; assess what is actually functional, scalable, and reliable.

Map out your data pipelines, understanding both their capacity and their limitations. Identify bottlenecks in data flow, processing speed, and storage accessibility. This honest inventory prevents unrealistic expectations and helps identify immediate upgrade needs. An example inventory list follows.

- Data Sources (Systems, Devices)

- Data Storage Platforms (Relational, Document, Key-Value, Columnar, Graph)

- Data Integration (Batch, Streaming)

- Data Quality (DQ, Master Data, Reference Data)

- Data Modeling (Normalized, Denormalized, etc.)

- Data Warehouse (Medallion, Operational Data Store)

- Data Science ML/AI (Models, Deployment Locations)

- Generative AI (OpenAI, AI Search, Content Safety, etc.)
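To make the inventory actionable, it can help to ask the same three questions of every item: does it work, does it scale, and is it reliable? The sketch below is a minimal illustration, assuming a simple yes/no judgment per attribute; the `CapabilityItem` class, its fields, and the sample entries are hypothetical, not a standard schema.

```python
from dataclasses import dataclass


@dataclass
class CapabilityItem:
    """One row of a hypothetical capability inventory."""
    category: str          # e.g. "Data Integration"
    name: str              # e.g. "Nightly batch ETL"
    owned: bool = True
    functional: bool = False
    scalable: bool = False
    reliable: bool = False

    def is_usable(self) -> bool:
        # Owning a tool is not enough: it must also work, scale, and be reliable
        return self.owned and self.functional and self.scalable and self.reliable


def summarize(items: list[CapabilityItem]) -> dict[str, tuple[int, int]]:
    """Return {category: (usable_count, total_count)}."""
    summary: dict[str, tuple[int, int]] = {}
    for item in items:
        usable, total = summary.get(item.category, (0, 0))
        summary[item.category] = (usable + int(item.is_usable()), total + 1)
    return summary


inventory = [
    CapabilityItem("Data Sources", "CRM REST API", functional=True, scalable=True, reliable=True),
    CapabilityItem("Data Integration", "Nightly batch ETL", functional=True, scalable=False, reliable=True),
    CapabilityItem("Data Integration", "Streaming ingestion", owned=False),
]

for category, (usable, total) in summarize(inventory).items():
    print(f"{category}: {usable}/{total} usable")
```

Separating "owned" from "usable" keeps the inventory honest: a tool that exists but cannot scale shows up as a gap rather than a capability.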

Gap Analysis

With your current state documented, compare it against your project requirements. Where do critical gaps exist? Perhaps your data storage can handle current volumes but won’t scale to project demands. Maybe your team has strong analytics skills but lacks machine learning expertise. Every identified gap represents a specific action item that can be addressed through technology acquisition, training, or strategic partnerships.
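One lightweight way to run this comparison is to score current and required maturity per capability and rank the shortfalls. This sketch assumes a hypothetical 0 to 5 maturity scale, made-up capability names, and a hypothetical mapping from each gap to a remediation route; the scores themselves remain a judgment call for your team.

```python
# Hypothetical maturity scores on a 0-5 scale
current = {"storage_scale": 3, "ml_expertise": 1, "analytics": 4, "streaming": 0}
required = {"storage_scale": 4, "ml_expertise": 3, "analytics": 3, "streaming": 2}

# Hypothetical mapping from each capability to a remediation route
remediation = {
    "storage_scale": "technology acquisition",
    "ml_expertise": "training or strategic partnership",
    "streaming": "technology acquisition",
}


def find_gaps(current: dict[str, int], required: dict[str, int]) -> list[tuple[str, int]]:
    """Return (capability, shortfall) pairs, largest shortfall first."""
    gaps = [
        (capability, target - current.get(capability, 0))
        for capability, target in required.items()
        if current.get(capability, 0) < target
    ]
    # sort() is stable, so ties keep the order in which requirements were listed
    gaps.sort(key=lambda gap: gap[1], reverse=True)
    return gaps


for capability, shortfall in find_gaps(current, required):
    action = remediation.get(capability, "to be decided")
    print(f"{capability}: short by {shortfall} -> {action}")
```

Capabilities where you already exceed the requirement (here, `analytics`) drop out, so every printed line is a concrete action item.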

Technical Readiness Assessment

Data Sources Assessment: Evaluate system integration capabilities by cataloging all source systems (ERP, CRM, IoT devices, external APIs) and assessing their API availability, data extraction methods, and real-time vs. batch capabilities. Test data accessibility, authentication mechanisms, and rate-limiting constraints. Document the volume, velocity, and variety of data from each source to understand scalability requirements.
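Volume and velocity can be turned into a first storage and throughput estimate with simple arithmetic: records per second × average record size × 86,400 seconds per day. The sketch below assumes steady average rates and uses hypothetical source names and figures; it ignores peaks, compression, and replication, which a real sizing exercise would add.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass
class SourceProfile:
    name: str
    records_per_second: float  # average velocity (assumed steady)
    avg_record_bytes: int      # average payload size per record
    extraction: str            # e.g. "batch", "streaming", "api"


def daily_volume_gb(source: SourceProfile) -> float:
    """Estimate raw daily volume in gigabytes (10^9 bytes)."""
    return source.records_per_second * source.avg_record_bytes * SECONDS_PER_DAY / 1e9


sources = [
    SourceProfile("ERP orders", records_per_second=5, avg_record_bytes=2_000, extraction="batch"),
    SourceProfile("IoT telemetry", records_per_second=2_000, avg_record_bytes=500, extraction="streaming"),
]

for src in sources:
    print(f"{src.name} ({src.extraction}): ~{daily_volume_gb(src):.2f} GB/day")
```

Even rough numbers like these (under 1 GB/day for the ERP feed versus roughly 86 GB/day for telemetry) make it obvious which sources drive the scalability requirements.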

Data Storage Platform Evaluation: Assess current storage infrastructure against ML/AI workload requirements by evaluating query performance, scalability limits, and ACID compliance for relational databases. Test document stores for schema flexibility and JSON handling, evaluate key-value stores for caching and session management, assess columnar databases for analytical workloads, and validate graph databases for relationship-heavy data modeling.
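Query performance is easiest to compare when every platform is measured with the same harness. The sketch below uses an in-memory SQLite database purely as a stand-in, with a hypothetical `events` table; in a real assessment you would point the same timing loop at the target platform with representative data. The p95 here is a simple nearest-rank approximation.

```python
import sqlite3
import statistics
import time


def benchmark_query(conn, sql, params=(), runs=50):
    """Return (median_ms, p95_ms) for a query executed `runs` times."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()
        timings.append((time.perf_counter() - start) * 1000)
    timings.sort()
    p95 = timings[int(0.95 * (len(timings) - 1))]  # nearest-rank approximation
    return statistics.median(timings), p95


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, device TEXT, value REAL)")
conn.executemany(
    "INSERT INTO events (device, value) VALUES (?, ?)",
    ((f"dev-{i % 100}", float(i)) for i in range(10_000)),
)

sql = "SELECT device, AVG(value) FROM events GROUP BY device"
median_ms, p95_ms = benchmark_query(conn, sql)
print(f"median={median_ms:.2f} ms, p95={p95_ms:.2f} ms")
```

Reporting a tail percentile alongside the median matters because interactive ML and analytics workloads tend to be judged by their slowest queries, not their typical ones.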

Data Integration Readiness: Evaluate ETL/ELT pipeline maturity by testing batch processing capabilities with sample datasets, assessing real-time streaming infrastructure using tools like Kafka or Azure Event Hubs, and measuring data latency requirements for IoT devices. Test change data capture (CDC) mechanisms and validate data synchronization across multiple systems.
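A quick way to exercise change-detection and synchronization logic before committing to a tool is a snapshot comparison: diff two keyed extracts, then check that replaying the changes on a replica reproduces the source. This is a simplified, snapshot-based form of CDC assumed for illustration; production CDC tools usually read the database transaction log instead, and `diff_snapshots` and `apply_changes` are hypothetical helpers.

```python
def diff_snapshots(previous: dict, current: dict) -> dict:
    """Classify differences between two snapshots keyed by primary key."""
    return {
        "insert": {k: v for k, v in current.items() if k not in previous},
        "update": {k: v for k, v in current.items() if k in previous and previous[k] != v},
        "delete": {k: v for k, v in previous.items() if k not in current},
    }


def apply_changes(target: dict, changes: dict) -> dict:
    """Replay classified changes onto a copy of a replica."""
    synced = dict(target)
    synced.update(changes["insert"])
    synced.update(changes["update"])
    for key in changes["delete"]:
        synced.pop(key, None)
    return synced


yesterday = {1: {"name": "Acme", "tier": "gold"}, 2: {"name": "Globex", "tier": "silver"}}
today = {1: {"name": "Acme", "tier": "platinum"}, 3: {"name": "Initech", "tier": "bronze"}}

changes = diff_snapshots(yesterday, today)
# Synchronization check: the replica must match the source after replay
assert apply_changes(yesterday, changes) == today
```

The final assertion is the useful part for a readiness test: whichever CDC mechanism you adopt, replaying its output should leave downstream systems identical to the source.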

Data Quality Assessment: Implement data profiling to measure completeness, accuracy, consistency, and timeliness metrics. Establish master data management processes for customer, product, and reference data entities. Create data quality scorecards with automated monitoring for null values, duplicates, format inconsistencies, and business rule violations. Test data lineage tracking capabilities.
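The scorecard metrics above can start as a few counts over a sample extract. The sketch below profiles a hypothetical customer list for completeness, duplicate keys, and email format; the loose regular expression is an assumption for illustration, and real format rules would come from your own data standards.

```python
import re
from collections import Counter

# Deliberately loose email pattern; an illustrative assumption, not a standard
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def profile(rows, key, required, email_column=None):
    """Return simple completeness, duplicate, and format metrics for a list of records."""
    total = len(rows)
    report = {"rows": total}
    # Completeness: share of rows where each required column is neither None nor empty
    for column in required:
        filled = sum(1 for r in rows if r.get(column) not in (None, ""))
        report[f"completeness.{column}"] = filled / total if total else 0.0
    # Duplicates: count of rows whose key value appears more than once
    key_counts = Counter(r.get(key) for r in rows)
    report["duplicate_rows"] = sum(n for n in key_counts.values() if n > 1)
    # Format consistency: populated values that fail the email pattern
    if email_column:
        report["invalid_format"] = sum(
            1 for r in rows if r.get(email_column) and not EMAIL_PATTERN.match(r[email_column])
        )
    return report


customers = [
    {"id": 1, "name": "Ada", "email": "ada@example.com"},
    {"id": 2, "name": "", "email": "grace@example"},
    {"id": 2, "name": "Grace", "email": None},
    {"id": 3, "name": "Linus", "email": "linus@example.org"},
]
print(profile(customers, key="id", required=["name", "email"], email_column="email"))
```

Running the same function on every scheduled load turns a one-off profile into the automated monitoring the scorecard needs.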

Data Modeling Evaluation: Assess existing data models for analytical readiness by evaluating normalized structures for transactional efficiency versus denormalized designs for analytical performance. Test star schema implementations for data warehousing and validate dimensional modeling approaches. Evaluate schema evolution capabilities for changing business requirements.
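To test whether a dimensional model answers typical analytical questions, a small star schema can be prototyped before touching production. The sketch below uses SQLite as a stand-in, with a hypothetical `fact_sales` table joined to product and date dimensions; table names and sample rows are illustrative only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
CREATE TABLE fact_sales (
    product_key INTEGER REFERENCES dim_product(product_key),
    date_key INTEGER REFERENCES dim_date(date_key),
    quantity INTEGER,
    amount REAL
);
INSERT INTO dim_product VALUES
    (1, 'Widget', 'Hardware'), (2, 'Gadget', 'Hardware'), (3, 'Support Plan', 'Services');
INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
INSERT INTO fact_sales VALUES
    (1, 20240101, 10, 100.0), (2, 20240101, 5, 75.0),
    (3, 20240201, 1, 500.0), (1, 20240201, 4, 40.0);
""")

# Typical dimensional query: slice the fact table by attributes of its dimensions
rows = conn.execute("""
SELECT d.month, p.category, SUM(f.amount) AS revenue
FROM fact_sales f
JOIN dim_product p ON p.product_key = f.product_key
JOIN dim_date d ON d.date_key = f.date_key
GROUP BY d.month, p.category
ORDER BY d.month, p.category
""").fetchall()

for month, category, revenue in rows:
    print(month, category, revenue)
```

If the business questions you expect can be written as short joins like this one, the model is likely analytically ready; if they need many self-joins or nested subqueries, that is a signal to revisit the design.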

Data Warehouse Architecture Assessment: Evaluate medallion architecture implementation with bronze (raw), silver (cleaned), and gold (business-ready) layers. Test operational data store performance for real-time analytics needs. Assess data warehouse query performance, partitioning strategies, and incremental loading capabilities. Validate backup, recovery, and disaster recovery procedures.
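The medallion layers can be illustrated with plain Python before choosing a platform. This sketch assumes hypothetical order records and a `loaded_at` watermark column for incremental loading; deduplication here only covers a single batch, and a production pipeline would quarantine bad rows rather than silently skip them.

```python
from collections import defaultdict

# Bronze: raw records exactly as ingested, including duplicates and bad values
bronze = [
    {"order_id": "A1", "region": "east", "amount": "120.50", "loaded_at": 1},
    {"order_id": "A1", "region": "east", "amount": "120.50", "loaded_at": 1},  # duplicate
    {"order_id": "A2", "region": "WEST ", "amount": "80", "loaded_at": 2},
    {"order_id": "A3", "region": "east", "amount": "n/a", "loaded_at": 3},     # unparseable
]


def to_silver(rows, watermark=0):
    """Clean, type, and deduplicate rows loaded after the watermark (within this batch)."""
    seen, silver = set(), []
    for row in rows:
        if row["loaded_at"] <= watermark or row["order_id"] in seen:
            continue
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # in practice, route to a quarantine table instead
        seen.add(row["order_id"])
        silver.append({
            "order_id": row["order_id"],
            "region": row["region"].strip().lower(),
            "amount": amount,
        })
    return silver


def to_gold(silver):
    """Business-ready aggregate: revenue by region."""
    totals = defaultdict(float)
    for row in silver:
        totals[row["region"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Passing a higher `watermark` processes only newer bronze rows, which is the basic idea behind the incremental loading capability the assessment should verify.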

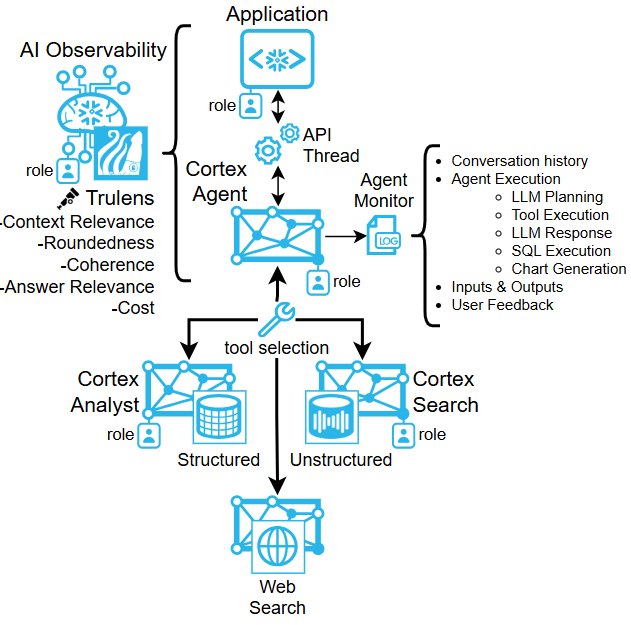

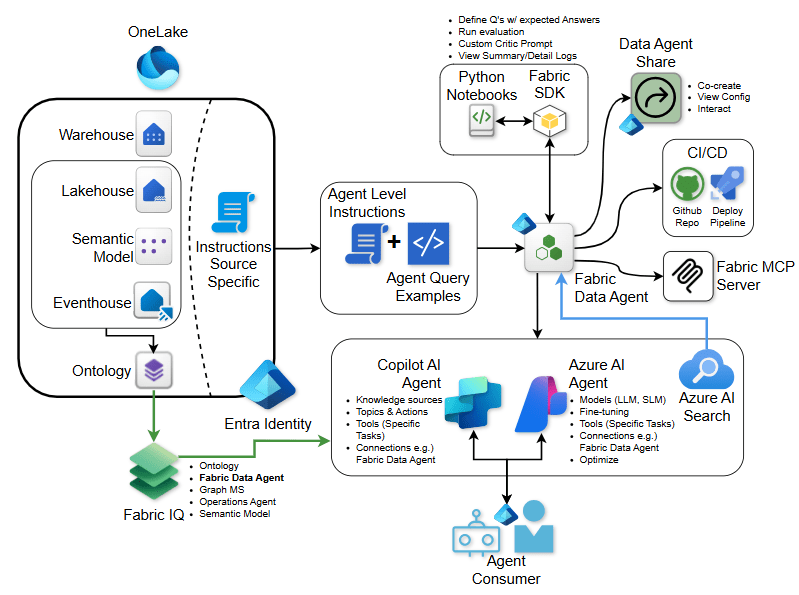

Data Science ML/AI Readiness: Assess MLOps maturity by evaluating model development environments, experiment tracking capabilities, and automated training pipelines. Test model deployment options (containers, serverless, edge), validate model monitoring and drift detection, and assess A/B testing infrastructure. Evaluate compute resources for training and inference workloads.
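Drift detection can be validated with a simple statistic such as the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. This is one common drift measure, not the only one; the bin edges, sample data, and the 0.25 threshold below are illustrative assumptions (0.25 is a frequently cited rule of thumb, not a universal standard).

```python
import bisect
import math


def bin_proportions(values, edges, eps=1e-6):
    """Share of values per bin [edges[i], edges[i+1]); the last bin also includes edges[-1]."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        if v < edges[0] or v > edges[-1]:
            continue  # this sketch ignores out-of-range values
        i = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
        counts[i] += 1
    total = sum(counts) or 1
    # eps keeps empty bins from causing log(0) or division by zero
    return [max(c / total, eps) for c in counts]


def psi(baseline, current, edges):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e = bin_proportions(baseline, edges)
    a = bin_proportions(current, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


edges = [0, 10, 20, 30]
training = list(range(30))                                # evenly spread across bins
production = list(range(10, 30)) + list(range(20, 30))    # shifted toward higher values

DRIFT_THRESHOLD = 0.25  # rule-of-thumb assumption
score = psi(training, production, edges)
print(f"PSI={score:.3f}, drift={'yes' if score > DRIFT_THRESHOLD else 'no'}")
```

A monitoring readiness test can then check that a deliberately shifted sample like `production` actually triggers an alert in your tooling.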

Generative AI Platform Assessment: Test Azure OpenAI integration capabilities, API rate limits, and cost optimization strategies. Evaluate AI Search implementation for retrieval-augmented generation (RAG) patterns, assess content safety filters and moderation capabilities, and validate prompt engineering and fine-tuning processes. Test vector database integration for semantic search capabilities.
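At its core, the retrieval step in a RAG pattern ranks stored document vectors by similarity to a query vector. The sketch below shows that idea with cosine similarity over toy three-dimensional vectors; it does not use the Azure AI Search API, and the document IDs and vectors are hypothetical stand-ins for real embeddings.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Brute-force nearest neighbours: score every document, keep the k best."""
    scored = sorted(
        ((cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()),
        reverse=True,
    )
    return [doc_id for _, doc_id in scored[:k]]


# Toy 3-dimensional vectors; real embeddings come from an embedding model
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "warranty-terms": [0.7, 0.2, 0.3],
}
query = [0.8, 0.1, 0.2]
print(top_k(query, index, k=2))
```

In a full RAG flow, the retrieved passages are inserted into the prompt as grounding context. A vector database replaces this brute-force loop with an approximate nearest-neighbour index, which is what the integration test should exercise at realistic corpus sizes.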

Cross-Cutting Technical Readiness Factors: Evaluate security and compliance frameworks (encryption, access controls, audit trails), assess monitoring and alerting capabilities, test disaster recovery and business continuity plans, and validate cost management and resource optimization strategies across all components.
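For cost management, a basic check is whether month-to-date spend, projected forward, will exceed the budget. This sketch assumes a constant daily run rate and a hypothetical 90% warning threshold; the figures are made up, and cloud platforms provide their own budgeting tools that a real setup would likely use instead.

```python
import calendar
from datetime import date


def projected_month_spend(spend_to_date: float, today: date) -> float:
    """Project month-end spend assuming the daily run rate stays constant."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return spend_to_date / today.day * days_in_month


def budget_status(spend_to_date: float, budget: float, today: date, warn_at: float = 0.9) -> str:
    """Classify projected spend against a monthly budget."""
    ratio = projected_month_spend(spend_to_date, today) / budget
    if ratio >= 1.0:
        return "over_budget"
    if ratio >= warn_at:
        return "warning"
    return "ok"


today = date(2024, 6, 10)
print(budget_status(4_200, budget=12_000, today=today))
```

Spending 4,200 in the first 10 days of a 30-day month projects to 12,600, so the check flags the 12,000 budget early enough to act, which is the point of validating cost management before workloads scale up.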

Future State

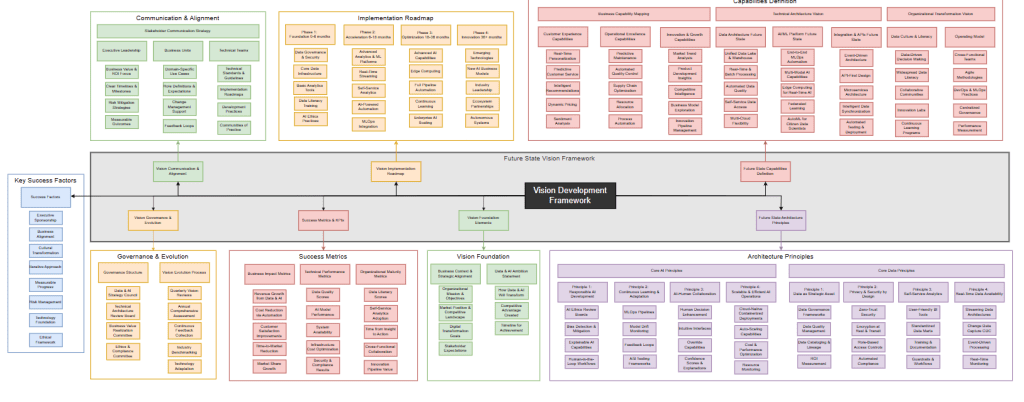

The “Future State Analysis” serves as the North Star for any successful Data and AI Architecture initiative, providing essential direction and coherence to what could otherwise become a fragmented collection of technological implementations. Without a clearly articulated vision of where the organization aims to be in its Data and AI maturity journey, architectural decisions tend to be reactive, siloed, and ultimately suboptimal. The Future State acts as a strategic blueprint that defines not just the technical capabilities an organization wants to achieve, but also how Data and AI will fundamentally transform business processes, decision-making, and competitive positioning. Creating this forward-looking perspective ensures that architectural investments are purposeful and aligned with long-term organizational objectives rather than driven by short-term tactical needs or the latest technological trends.

Creating a Future Vision

Creating a comprehensive Data and AI vision before diving into technical requirements is crucial for translating business aspirations into actionable architectural components. A vision-first approach enables organizations to identify the specific capabilities they need to develop, whether that’s real-time analytics, automated decision-making, personalized customer experiences, or predictive operational insights. By starting with the end state in mind, architects can work backward to determine the data infrastructure, AI platforms, governance frameworks, and integration patterns required to support these capabilities. This methodology prevents the common pitfall of implementing impressive but disconnected technologies that fail to deliver meaningful business value. Moreover, a well-articulated vision serves as a communication bridge between business stakeholders and technical teams, ensuring that everyone understands not just what is being built, but why it matters and how it contributes to the organization’s strategic goals.

Transforming Requirements into Action

Defining Data and AI capabilities and technology requirements is not an academic exercise. It is the foundation for successful project execution. Organizations that invest time and expertise in thorough technical scoping create realistic project plans, avoid costly architectural changes, and deliver sustainable business value.

The frameworks presented here, refined through dozens of real-world engagements, provide a structured approach to one of the most challenging aspects of Data and AI project scoping. By systematically addressing capability assessment, technology selection, performance requirements, security considerations, resource constraints, and operational readiness, organizations can transform ambitious visions into achievable technical realities.

The difference between successful and failed Data and AI projects often comes down to this single scoping element. Those who master it build lasting competitive advantages. Those who skip it learn expensive lessons about the importance of technical planning.
